Understanding the Battlefield

To start, we should define our terms and clear up some common misconceptions in this arena.

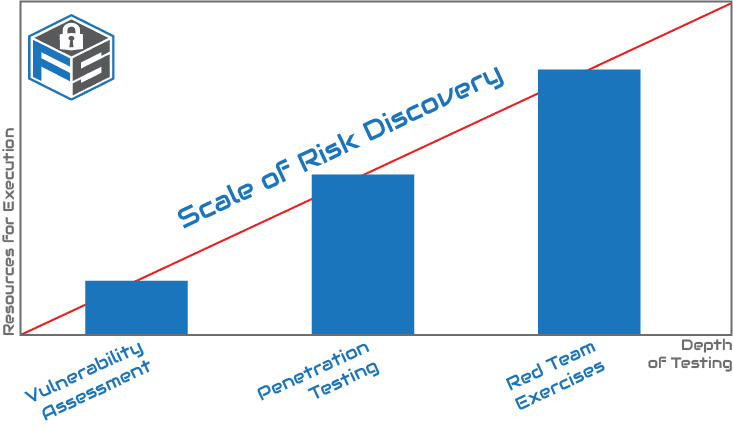

A penetration test (pentest) is designed to identify as many known types of vulnerability in the security of an organisation (or a specified technical asset) as possible. As these are identified, the pentester’s role is to establish how much damage a genuine threat actor could inflict, by safely exploiting the vulnerabilities to see how far the mock compromise can go in scale and seriousness. Just as importantly, the pentester looks at how exploits can be chained together, each informing and strengthening the next and opening up further attacks.

Pentesting engagements are much more sophisticated than a simple vulnerability assessment (VA), which typically identifies vulnerabilities but doesn’t attempt to verify them or rank the seriousness of the associated risks, nor feed newfound information back into the attack loop. VAs lack the human factor that brings ingenuity and creativity to a proper pentest. Moreover, a pentest assignment is usually charged with recommending improvements to guard against the identified exploits.

Much like pentesting, red teaming at its core pits attackers against the defences of an organisation to ascertain risks and potential, otherwise unseen, attack vectors. The primary difference between a red team engagement and a pentest is depth. While pentesting does go ‘down the rabbit hole’ to explore the potential scope of possible exploits, red teams prioritise how far they can infiltrate and exfiltrate, and how well the target organisation detects and responds. Red teams aren’t as interested in identifying and ranking as many vulnerabilities as can be found, but rather in just how far ‘the one’ might lead toward serious compromise. That is to say, they may well attempt several vectors successfully, but they explicitly aren’t testing all shortcomings – just the one(s) that will achieve their objective.

As the red team is typically charged with a specific objective rather than a broad sweep of an organisation’s security capability, the engagement usually becomes a wargame without the defined rules of engagement found in a vulnerability assessment or penetration test. Red team engagements also typically take longer to execute than a penetration test, as more reconnaissance is often involved, footprinting aspects of the target organisation that fall outside a typical pentest, such as social engineering and physical security.

By way of example, a red team exercise might discover that a member of the executive team inside the target organisation always tweets a geotagged picture of his morning coffee. This might lead the red team to stake out the location and note where he is, and when, each morning. From there, they may conduct physical surveillance to identify the make, model, and license plate of his car. The red team may then jam the signal from his key fob with a white noise generator covering the MHz range used by most cars, so that the car never receives the lock command. With the car left unlocked, the attacker has unfettered access to the corporate laptop and can deposit malware or a keylogger to use as a beachhead for a bigger attack.

The blue team is most often made up of internal staff responsible for the organisation’s security. This may be the core IT team along with security specialists, as well as those charged with physical security, such as the guards who limit physical access to the building. Their function is certainly more passive in a red vs. blue style engagement, as the defences should already be in situ. Their primary responsibility is to ensure that the security measures in place have hardened existing systems, most likely through strong IT fundamentals such as patching. Their role in this game becomes more about how well their existing incident response works.

The scope of this article doesn’t allow a deep dive into many of these practices, so we will be creating a separate piece focused on blue team best practice.

The “Red vs. Blue” Dynamic

The idea of red and blue teams goes back to military wargames. People in the same army engage in mock combat to test and sharpen their skills. Putting allies into an adversarial role motivates and excites them, and gives them an opponent to measure themselves against. Each side tries to beat the other, and both sides should come out stronger for it. Even though they take adversarial roles, they’re ultimately on the same side. In our context, their goal is to discover and eliminate any weaknesses in the security of a system or network. Each side ought to learn from and adapt to the successes and failures of the other. Even the external firms that red teams often are can benefit, as their attacks become more creative and focused when they encounter capable blue teams. It’s an arms race, but one where every event should have a positive outcome. Iron sharpens iron, after all.

It’s natural for adversaries to be reluctant to cooperate. They forget their original purpose and concentrate on winning. Yet the company employing a red team (either internal staff or an external specialist team) requires complete transparency from both sides. If it was successfully attacked, it needs to know exactly how, and where its defences and protocols fell short. It needs to be aware of the issues and remediate them. This requires the two sides to coordinate their information. It requires, to a certain extent, cooperation rather than pure competition.

This dynamic can break down if competition leads to concealing information. While red team consultancies are obligated to disclose their methods, internal teams can be less forthcoming, even if not deliberately so. It’s harder to stop an attack if you don’t know how it works, so when internal staff act as the red team it’s vital to ensure they document their processes. Conversely, the blue team could try to make itself look more effective than it really is, and downplay the scope of any compromise. The element of ego is best removed by strong messaging that this is an exercise for the betterment of the company, not a method of auditing the capabilities or work of the respective teams.

Adding The Purple Team

Sometimes we hear the idea that a “purple team” is needed to act as a bridge between the red and blue teams, because the two sides need to share information in order to improve security. But the definition is vague at best, and has been interpreted in several ways. Even cursory research turns up various proposals for a purple team to overcome the lack of communication in security testing, with a lot of variation in just what that is supposed to mean. The most logical definition is that a purple ‘team’ doesn’t mean a separate, permanent team or function like red or blue. But then what does it mean? Is it necessary if the two primary teams are doing their jobs?

One alternative perspective is that instead of having separate teams for attack and defence, the company has a single team whose members participate in both roles. The same person may be an attacker in one project and a defender in another. Our experience at Fort Safe is that this doesn’t work very well, since the reds are usually outsiders who specialise in innovative and often highly technical attacks, while the blues are the people in the company who already know the system and its defences, and are therefore best equipped to shore up those defences if they’re made aware of any potential issues or attack vectors.

Another idea is that the purple team really isn’t a team as such, but a process or concept of information sharing. While sharing information is certainly the goal, this definition doesn’t tell us much about how to accomplish it.

Some commentary posits that a purple team is a temporary group which steps in when the red and blue teams aren’t doing their jobs properly. This leaves open the question of how an ad hoc team is going to make communication happen after things have gone awry.

While this is mostly a technical environment, the idea of ‘purple’ needs to take a wider view, incorporating – at least in part – elements of organisational and behavioural psychology that cover physical security, preparedness against social engineering attacks, and the like.

A better way to think about it is to step back to the fact that both teams are chasing the same objective. They take an adversarial role because competition stimulates them. But, and this is crucial, they shouldn’t be adversarial all the time. When the situation calls for it, they should combine into a purple team to review what they’re doing. They need to be able to switch between competitive and cooperative modes.

What The Purple Team Needs To Do

It seems rational, then, that the purple team is just the red and blue teams getting together and exchanging information. The blues need to let the reds know what got through, what was stopped, and the specific methods employed. Conversely, the reds need to present their successes in a format which the blues can understand and use. These might include successful penetrations which the blue team didn’t notice, and a full report complete with recommendations for remediation.

Information sharing advances a counterintuitive goal. The information from the blues should help the reds to concoct more effective attacks. The information which the reds provide should let the blues improve their defences. From a behavioural standpoint it isn’t easy to get people to switch modes this way, so we need to lean on frameworks and resources that have been shown to work, namely by establishing this kind of behaviour as typical – or rather expected – within the organisation as a whole.

Objective standards for expected information and behaviour will keep both teams ‘honest’. The red team needs to keep a record of all the tests it conducts and their results, both successful and unsuccessful. The blue team needs to extract information from its logs to show what attempts were detected and what the results were. Between them, there has to be a process for comparing their respective reports, so that the company can tell which attacks worked, whether the successful ones were mitigated, and which ones escaped notice.
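As a rough illustration of what such a comparison process could look like, here is a minimal Python sketch. Everything in it is an assumption made for the example rather than a prescribed format: the `RedTest` and `BlueDetection` record shapes, the `Outcome` categories, and above all the shared `test_id` used to match the two reports. In practice the blue team’s logs won’t carry the red team’s identifiers, so matching would more likely rely on timestamps, hosts, or techniques.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable, Optional


class Outcome(Enum):
    """Hypothetical categories for the joint report."""
    FAILED = "attack did not work"
    MITIGATED = "worked, detected and mitigated"
    DETECTED_ONLY = "worked, detected but not mitigated"
    ESCAPED_NOTICE = "worked and escaped notice"


@dataclass(frozen=True)
class RedTest:
    """One entry from the red team's record of tests (assumed shape)."""
    test_id: str
    technique: str
    succeeded: bool


@dataclass(frozen=True)
class BlueDetection:
    """One entry extracted from the blue team's logs (assumed shape)."""
    test_id: str      # assumed shared identifier; real logs rarely have one
    mitigated: bool


def classify(test: RedTest, detection: Optional[BlueDetection]) -> Outcome:
    """Classify a single red team test against the matching detection, if any."""
    if not test.succeeded:
        return Outcome.FAILED
    if detection is None:
        return Outcome.ESCAPED_NOTICE
    return Outcome.MITIGATED if detection.mitigated else Outcome.DETECTED_ONLY


def reconcile(
    red_tests: Iterable[RedTest],
    blue_detections: Iterable[BlueDetection],
) -> Dict[str, Outcome]:
    """Compare the two reports and return an outcome for every red team test."""
    detections = {d.test_id: d for d in blue_detections}
    return {t.test_id: classify(t, detections.get(t.test_id)) for t in red_tests}


if __name__ == "__main__":
    red = [
        RedTest("T1", "phishing", succeeded=True),
        RedTest("T2", "password spraying", succeeded=False),
        RedTest("T3", "lateral movement", succeeded=True),
    ]
    blue = [BlueDetection("T1", mitigated=True), BlueDetection("T2", mitigated=False)]
    for test_id, outcome in reconcile(red, blue).items():
        print(test_id, outcome.value)
```

The point of the sketch is only that both reports feed one shared table, so neither team’s account stands alone. A fuller version would probably also flag blue team detections that match no red team test, since those may be genuine attacks.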

How To Think Purple Team

People in competition tend to think of each other as foes. Fans of opposing football teams can become fanatical (which is where the word “fan” comes from) even though there’s no moral difference between their teams. Politicians supposedly all work for the good of their country, but partisan differences can become more important than their nominal goals. The aim of beating the opponent is a deep-rooted part of human psychology for many individuals.

The interrelationship between competition and cooperation is a complex one. Academics have looked into the psychology of balancing the two, and we can borrow some of the core concepts to encourage clearer communication in this context. People often try to compete and cooperate at the same time, and the resulting behaviours can be at odds with the improvements sought from the exercise.

The best results come with leaders whose goal is to bring the two sides together. They should cultivate good relations with both teams, and act as both coordinator and mediator. They need to remind each side that its goal isn’t to do better than the other, but to do its own job superlatively. A ‘purple event’ at the culmination of the exercise works as a better mental frame of reference than the term ‘purple team’.

Not every team gets carried away with the competitive mania. If they keep their real goals in mind, they’ll provide the information which the company needs to eliminate weaknesses and strengthen its defences. As long as they do this, the purple team emerges naturally, without any special effort. However, management should always keep its eyes open to make sure information and subjective feedback are flowing as they should in a prosocial atmosphere. If a mindset of ‘winning at all costs’ starts to emerge, it ought to be countered as soon as it’s discovered. One way or another, the promotion of cooperation needs to be part of every pentesting project.

Red and Blue – Two Sides, One Goal

The red and blue teams are aiming for the same thing: improved system security. Sometimes they have to step back from their respective roles in order to focus on the ultimate goal. Doing this requires breaking away from reflexively competitive ways of thinking. It is the primary function of management to ensure that the teams’ competitive spirit allows for the best performance, but stops short of undermining the ultimate outcomes: insights into security posture and its fundamental improvement.

Getting the red and blue teams to resolve themselves, when necessary, into a single team (or rather at a single place, a ‘purple event’) that examines the overall picture is a tricky matter. Leadership skills and constant communication help to keep the division between the outsiders storming the barricades and the insiders defending them from becoming an obsession. Both teams win when they work together.

To find out more about how to facilitate this incredibly important capability within your organisation, speak with Fort Safe today.